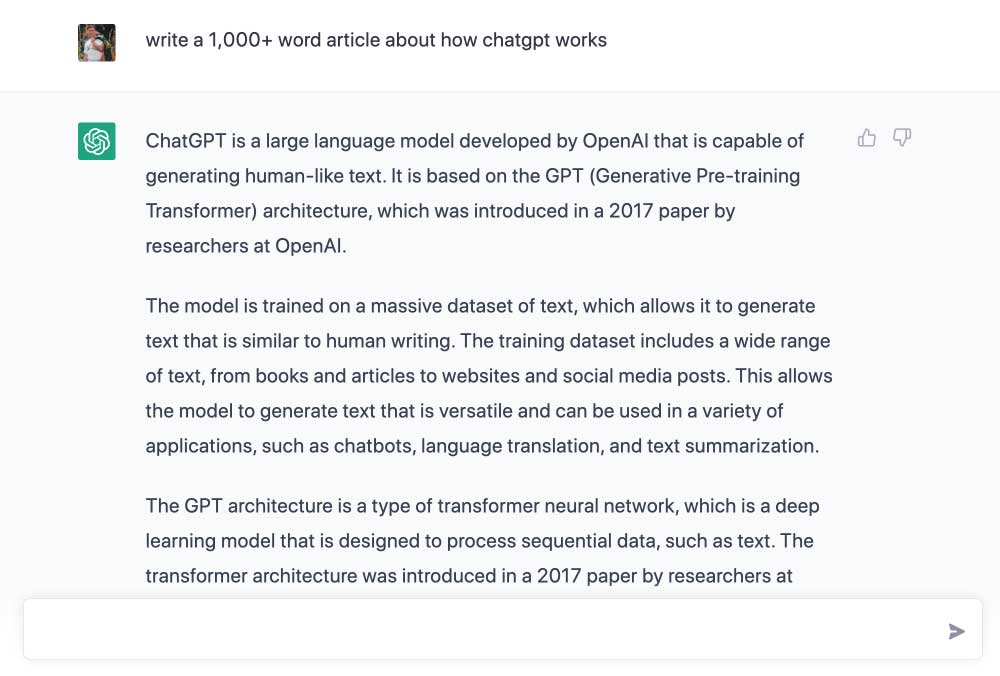

Quick Note: This article was not really written by me. It was created by the very thing that the article is about: ChatGPT. As you can see in the image below, I asked ChatGPT to write a 1,000+ word article about how ChatGPT works.

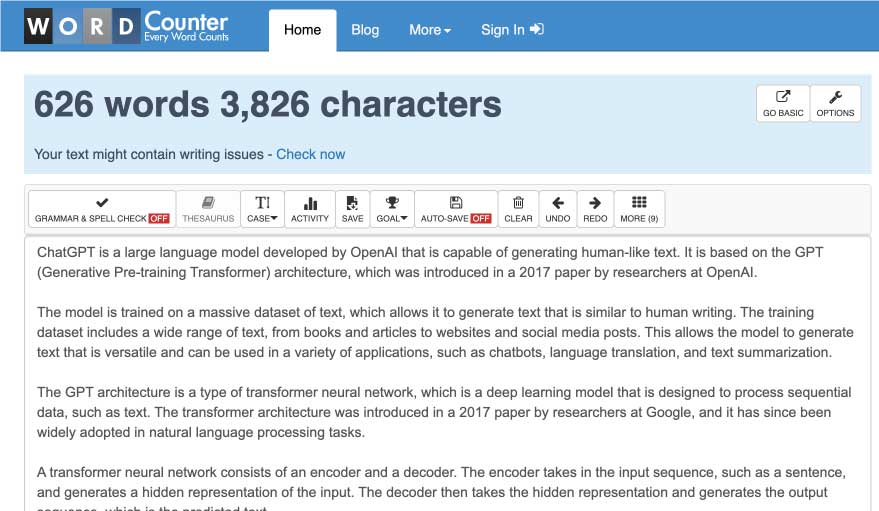

On the first try, ChatGPT only came up with 626 words. I then asked it to expand out its description to be at least 1,000 words. It came close with just over 900 words. On the third try, ChatGPT came out with guns blazing, hitting 1,639 words.

Nice work!

Everything in this article below the line here was done by ChatGPT. How do you think it did? Let me know.

ChatGPT is a powerful language model that is capable of generating human-like text. It is based on the GPT (Generative Pre-training Transformer) architecture, which was introduced in a 2017 paper by researchers at OpenAI. The model is trained on a massive dataset of text, which allows it to generate text that is similar to human writing. This makes it a useful tool for a wide range of natural language processing (NLP) tasks, such as chatbots, language translation, and text summarization.

The GPT architecture is a type of transformer neural network, which is a deep learning model that is designed to process sequential data, such as text. The transformer architecture was introduced in a 2017 paper by researchers at Google, and it has since been widely adopted in NLP tasks. A transformer neural network consists of an encoder and a decoder. The encoder takes in the input sequence, such as a sentence, and generates a hidden representation of the input. The decoder then takes the hidden representation and generates the output sequence, which is the predicted text.

The key innovation of the transformer architecture is the attention mechanism, which allows the model to focus on specific parts of the input sequence when generating the output. This is important because it allows the model to handle input sequences of varying lengths and to understand the relationships between different parts of the input. In the GPT model, the attention mechanism is further enhanced by the use of multi-head attention, which allows the model to attend to multiple parts of the input sequence at the same time.

ChatGPT is pre-trained on a massive dataset, which allows it to generate text that is similar to human writing. The pre-training process involves training the model on a large dataset of text, such as books and articles, for a long period of time. This allows the model to learn the patterns and structure of human language, which is necessary for generating text that is similar to human writing. The pre-training process also allows the model to learn the long-term dependencies present in the language, which is essential for generating coherent and fluent text.

Once the model is pre-trained, it can be fine-tuned on a smaller dataset for a specific task, such as language translation or text summarization. Fine-tuning involves training the model on a smaller dataset for a shorter period of time, which allows it to learn the specific patterns and structure of the task at hand. This makes the model more accurate and efficient for the specific task.

One of the main advantages of ChatGPT is its ability to generate text that is similar to human writing. This is because it is trained on a massive dataset of text, which allows it to learn the patterns and structure of human language. Additionally, the transformer architecture and attention mechanism allow the model to understand the relationships between different parts of the input, which is important for generating coherent and fluent text. The use of multi-head attention in the GPT model further enhances the ability of the model to understand the input and generate human-like text.

Another advantage of ChatGPT is its ability to be fine-tuned for specific tasks. This allows the model to learn the specific patterns and structure of the task at hand, which makes it more accurate and efficient for the task. This is particularly useful for NLP tasks that require a high degree of accuracy, such as language translation or text summarization.

In addition to its ability to generate human-like text, ChatGPT also has the ability to perform other NLP tasks such as question answering, conversation generation, text completion and more. This is because the model has been trained on a diverse dataset of text, which allows it to understand the context and relationships between different words and phrases. This makes it well-suited for tasks that require a deep understanding of the input, such as question answering or conversation generation.

One of the most popular uses of ChatGPT is in chatbot applications. Chatbots are computer programs that can simulate human conversation, and they are becoming increasingly popular in customer service, e-commerce, and other industries. ChatGPT can be fine-tuned on a dataset of conversational data, which allows it to understand the context and flow of a conversation. This makes it well-suited for chatbot applications that require a high degree of natural language understanding.

Another application of ChatGPT is in text completion, where the model can generate text based on a given prompt. This can be used for generating summaries of long articles, completing sentences or paragraphs, and more. The model can also be fine-tuned on specific types of data to generate text that is specific to a particular domain, such as legal documents or scientific papers.

In conclusion, ChatGPT is a powerful language model that is capable of generating human-like text. It is based on the GPT architecture and is pre-trained on a massive dataset of text, which allows it to learn the patterns and structure of human language. The model can be fine-tuned for specific tasks, which makes it more accurate and efficient for the task. ChatGPT can be used for a wide range of NLP tasks, such as chatbots, language translation, text summarization, question answering, conversation generation and text completion. With the constant advancement of technology and the increasing amount of data available for training, the capabilities of ChatGPT and other language models will continue to improve in the future.

One of the key features of ChatGPT is its ability to generate text that is similar to human writing. This is because it is trained on a massive dataset of text that includes a wide range of writing styles and formats. This allows the model to learn the patterns and structure of human language, including grammar, syntax, and vocabulary. Additionally, the transformer architecture and attention mechanism allow the model to understand the relationships between different parts of the input, which is important for generating coherent and fluent text.

Another advantage of ChatGPT is its ability to be fine-tuned for specific tasks. This allows the model to learn the specific patterns and structure of the task at hand, which makes it more accurate and efficient for the task. For example, fine-tuning ChatGPT on a dataset of customer service interactions can make it better suited for chatbot applications that require a high degree of natural language understanding.

One of the most popular applications of ChatGPT is in chatbot development. Chatbots are computer programs that can simulate human conversation and are used in a variety of industries, such as customer service, e-commerce, and more. ChatGPT can be fine-tuned on a dataset of conversational data, which allows it to understand the context and flow of a conversation. This makes it well-suited for chatbot applications that require a high degree of natural language understanding.

Another application of ChatGPT is in text summarization. Text summarization is the process of generating a condensed version of a text document that retains the main ideas and information. ChatGPT can be fine-tuned on a dataset of news articles or other text documents to generate summaries that are similar to those written by humans. This can be useful in a variety of settings, such as news aggregators or content curation platforms.

ChatGPT is also useful for language translation tasks. By fine-tuning the model on a dataset of bilingual text, it can learn to translate between languages with a high degree of accuracy. Additionally, ChatGPT can be fine-tuned on a dataset of specific domain-specific documents, such as legal or scientific papers, which can be useful for generating translations that are specific to a particular field.

Another application of ChatGPT is in text completion. Text completion is the process of generating text based on a given prompt, such as completing a sentence or a paragraph. ChatGPT can generate text that is coherent and fluent, which makes it well-suited for this task. Additionally, the model can be fine-tuned on specific types of data to generate text that is specific to a particular domain, such as legal documents or scientific papers.

In conclusion, ChatGPT is a powerful language model that has a wide range of applications in natural language processing (NLP) tasks. Its ability to generate text that is similar to human writing, and its ability to be fine-tuned for specific tasks, makes it a valuable tool for a variety of industries and applications. Some of the most popular applications of ChatGPT include chatbot development, text summarization, language translation, and text completion.

One of the key features of ChatGPT is its ability to generate text that is similar to human writing, which is made possible by its pre-training on a massive dataset of text. Additionally, the transformer architecture and attention mechanism allow the model to understand the relationships between different parts of the input, which is important for generating coherent and fluent text.

Moreover, the flexibility of the model is another advantage that makes it a valuable tool, it can be fine-tuned on specific tasks and specific domains, this allows the model to specialize in certain task and improve its performance.

As technology and the availability of data continue to advance, it’s expected that the capabilities of ChatGPT and other language models will continue to improve in the future. This will open up new possibilities for NLP tasks and applications, and make it even more valuable for industries and businesses that rely on natural language understanding and generation.

In summary, ChatGPT is a powerful language model that has the ability to generate human-like text, it can be fine-tuned for specific tasks, and it has a wide range of applications in NLP tasks. As technology and data continue to advance, the capabilities of ChatGPT and other language models will continue to improve, which will open up new possibilities for NLP tasks and applications in the future.