In today’s data-driven world, managing, transporting, and processing vast amounts of real-time data has become pivotal for many businesses. Kafka, an open-source distributed event streaming platform, has emerged as a favorite for handling real-time data feeds. Originally developed at LinkedIn and later donated to the Apache Software Foundation, Kafka is designed to process trillions of events a day, offering stream processing capabilities, durability, and fault tolerance.

Kafka is built on the “publish-subscribe” model. Producers push data to topics (named categories of data), and consumers pull data from these topics. Many producers can write to a topic, and many consumers can read from it, at the same time. Kafka also scales horizontally: data and load can be distributed over many servers, and capacity can be added without incurring downtime.
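
The push/pull split described above can be illustrated with a toy model in plain shell. This is only a sketch, not Kafka itself: the "topic" is an append-only file, and the `publish` and `poll` functions, the scratch directory, and the message names are all made up for illustration. It assumes that each consumer keeps track of its own read position (offset), which is why two consumers can read the same topic independently.

```bash
# Toy model only: a "topic" here is an append-only file, not a real Kafka topic.
set -eu

TOPIC_DIR="$(mktemp -d)"   # hypothetical scratch location for the toy log

# publish TOPIC MESSAGE: a producer appends one record to the end of the log.
publish() {
  printf '%s\n' "$2" >> "$TOPIC_DIR/$1.log"
}

# poll TOPIC OFFSET: a consumer pulls every record at or after OFFSET (0-based).
poll() {
  tail -n "+$(( $2 + 1 ))" "$TOPIC_DIR/$1.log"
}

# Producers write to the same topic; records keep their arrival order.
publish my-topic "order-created"
publish my-topic "order-paid"
publish my-topic "order-shipped"

# Consumers read independently, each from its own offset.
poll my-topic 0   # consumer A starts at the beginning and sees all three records
poll my-topic 2   # consumer B has already processed two records and sees only the last
```

Reading never removes a record from the log in this model, so a consumer that starts later can still replay everything from offset 0.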

Beyond acting as a message broker, Kafka has matured to support stream processing: the Kafka Streams API, a client library that ships with Kafka, lets applications transform data streams as they arrive. This has enabled the creation of real-time analytics and monitoring dashboards, making Kafka a complete streaming platform.
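
Kafka Streams is a Java library, so the following is not the Streams API. As a rough stand-in in shell, it shows the same read-transform-write shape with a stateless, per-record transform. The `transform` function and the topic names `input-topic` and `output-topic` are hypothetical, and the commented pipeline assumes a broker listening on `localhost:9092`.

```bash
# Stateless per-record transform: uppercase each message (illustrative only).
# Records are handled one at a time so output is emitted as each record arrives.
transform() {
  while IFS= read -r record; do
    printf '%s\n' "$record" | tr '[:lower:]' '[:upper:]'
  done
}

# Local dry run, no broker needed:
printf 'page-view\nclick\n' | transform

# Against a running broker, the same function could sit between the console
# tools (topic names are assumptions; run from the Kafka directory):
#   bin/kafka-console-consumer.sh --topic input-topic --bootstrap-server localhost:9092 \
#     | transform \
#     | bin/kafka-console-producer.sh --topic output-topic --bootstrap-server localhost:9092
```

A real Streams application adds what this sketch lacks, such as stateful operations and fault-tolerant processing, but the flow of records from an input topic through a transformation to an output topic is the same idea.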

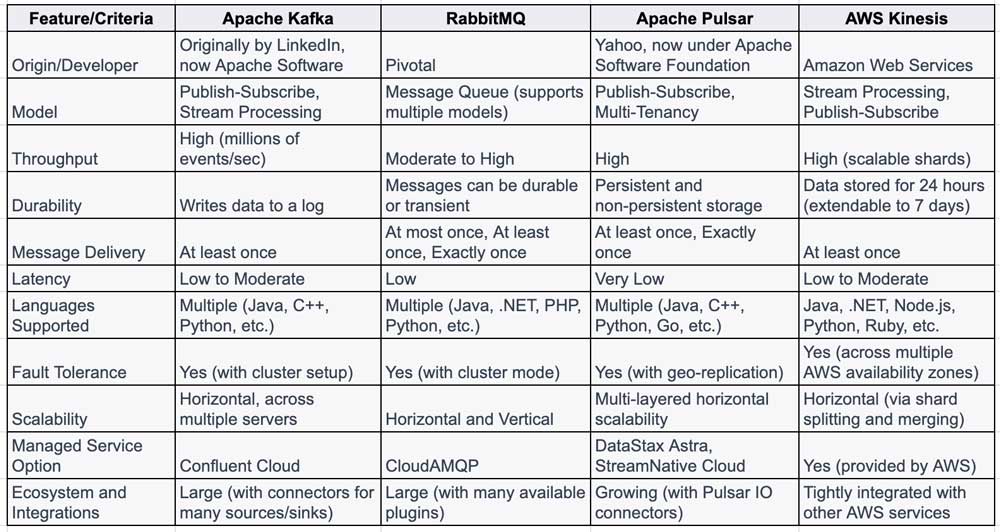

Apache Kafka overlaps in functionality with several other tools in the message queue/broker category. The chart below compares Kafka to several alternatives, including RabbitMQ, Apache Pulsar, and AWS Kinesis.

To try Kafka locally, extract the release archive and change into its directory:

```bash
tar -xzf kafka_2.x-x.x.x.tgz
cd kafka_2.x-x.x.x
```

Start ZooKeeper, then start the Kafka broker. Each command keeps running, so use a separate terminal for each:

```bash
bin/zookeeper-server-start.sh config/zookeeper.properties
bin/kafka-server-start.sh config/server.properties
```

Create a topic, then start a console producer and type a few messages into it:

```bash
bin/kafka-topics.sh --create --topic my-topic --bootstrap-server localhost:9092
bin/kafka-console-producer.sh --topic my-topic --bootstrap-server localhost:9092
```

In a new terminal, start a consumer to read these messages:

```bash
bin/kafka-console-consumer.sh --topic my-topic --from-beginning --bootstrap-server localhost:9092
```

In conclusion, Kafka’s robustness, scalability, and versatility have made it an integral part of the tech stack for organizations that want to handle streaming data efficiently. From big names like Netflix and Uber to startups, many rely on Kafka for their real-time data processing needs. As you delve deeper into Kafka, you’ll discover how much more it can do for data streaming.